Google’s Gemini Ultra Cost $191M to Develop ✨

What we’re showing

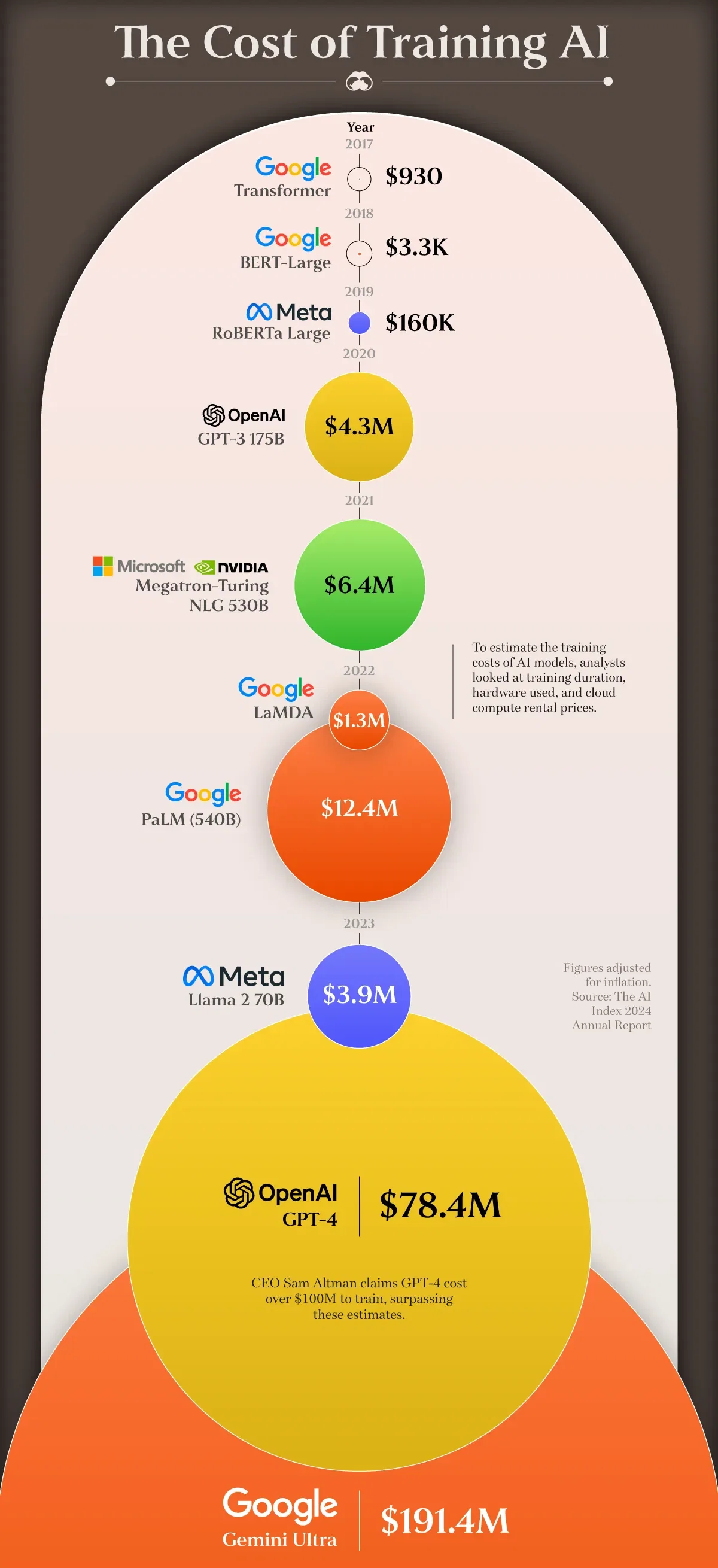

The estimated training costs of select AI models, based on analysis from the AI research institute Epoch AI. Analysts took into account variables including training duration, hardware used, and cloud compute rental prices.

This data was accessed via Stanford University’s 2024 AI Index Report.

Transformer

Google’s Transformer model from 2017, which according to the report “introduced the architecture that underpins virtually every modern large language model (LLM)”, cost an estimated $900 to train.

Gemini Ultra is the most expensive model to date

Google’s Gemini Ultra is believed to have cost a staggering $191 million to train. The figure reflects the close link between training cost and the amount of computation a model requires.

For example, Gemini Ultra required 50 billion petaFLOP of training compute (one petaFLOP equals one quadrillion floating-point operations). OpenAI’s GPT-4, which required 21 billion petaFLOP, cost $78 million.

Here, the petaFLOP figure measures the total amount of computation used during training, not the speed of the hardware. A higher value generally indicates a larger model, a larger dataset, or a longer training run, all of which push up the cost.
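To make the cost-to-compute relationship concrete, the short Python sketch below divides each model's estimated cost by its training compute, using only the two data points quoted above. The names `MODELS` and `cost_per_petaflop` are illustrative, and the result is a rough back-of-the-envelope comparison rather than part of Epoch AI's methodology.

```python
# Figures as quoted in this article (estimates, not exact values).
MODELS = {
    "Gemini Ultra": {"cost_usd": 191e6, "compute_petaflop": 50e9},
    "GPT-4": {"cost_usd": 78e6, "compute_petaflop": 21e9},
}


def cost_per_petaflop(cost_usd: float, compute_petaflop: float) -> float:
    """Return the estimated dollars spent per petaFLOP of training compute."""
    return cost_usd / compute_petaflop


for name, m in MODELS.items():
    rate = cost_per_petaflop(m["cost_usd"], m["compute_petaflop"])
    print(f"{name}: ${rate:.4f} per petaFLOP")

# Compare how compute and cost scale between the two models.
gemini, gpt4 = MODELS["Gemini Ultra"], MODELS["GPT-4"]
compute_ratio = gemini["compute_petaflop"] / gpt4["compute_petaflop"]
cost_ratio = gemini["cost_usd"] / gpt4["cost_usd"]
print(f"Compute ratio: {compute_ratio:.2f}x, cost ratio: {cost_ratio:.2f}x")
```

Both models come out at a little under $0.004 per petaFLOP. Gemini Ultra used roughly 2.4 times the compute of GPT-4 and cost roughly 2.4 times as much, which suggests that, for these two estimates at least, cost scaled almost in proportion to compute.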